Preparing for academic AI

If Chat GPT is let loose on academic publishing, we’ll need rigorous checks in place.

In the past few weeks, the chatbot Chat GPT (Generative Pre-Trained Transformer) has been gaining headlines as a possible rival to writers of all kinds, from journalists to academics. Created by Open AI, a company founded in 2015 with investments from Elon Musk and Peter Thiel, the chatbot has been released for public trial. Responding to questions and prompts entered by users, it uses artificial intelligence to generate writing of near-professional quality. Its strength lies in analysing large amounts of data rather than composing imaginative text: when asked to write a poem about winter, the bot produced the rather less-than-impressive lines, "though it may be cold and dark/ It is a season that fills our hearts". Nevertheless, the chatbot can take on the register of academic prose with uncanny credibility and can instantly, for example, write an original abstract for a research paper. This new technology stands to have a huge impact on academic publishing and the peer-review process.

While Chat GPT has been made available for us all to try, little about the software is public knowledge. In contrast to previous advances in AI technology, the technology behind the program has not been published by Open AI in a peer-reviewed journal. However, the company’s ambitions for the technology are extensive and we should be ready to anticipate the changes it might cause in the academic writing process. When asked "Will Chat GPT transform academic writing?", the program responded, "GPT could potentially be used to generate drafts of papers or to assist with research by suggesting relevant papers or sources". Chat GPT cannot create new ideas, but it can draw on information from the internet up to 2021 (it was ‘trained’ on information up to and including that year). This means that it could potentially enable researchers to generate papers more quickly and in greater volumes than ever before.

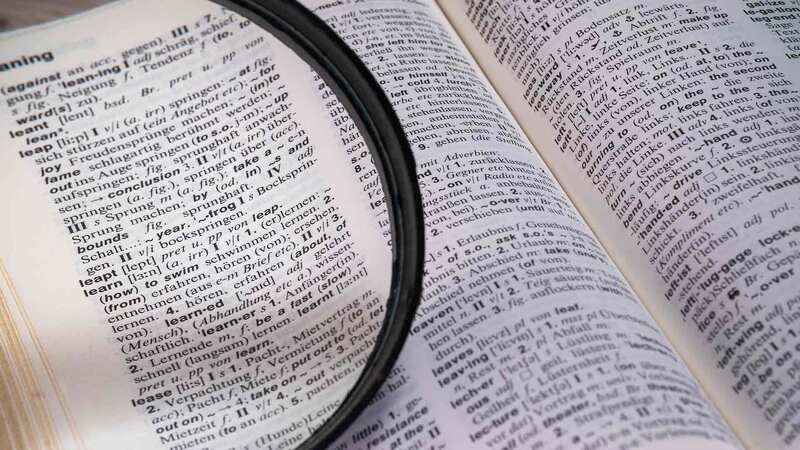

From a publisher’s perspective, this prospect is concerning. Chat GPT is currently unable to distinguish between fake and real information and will readily generate false citations and statistics. Improperly checked and even false research is already a problem in academic publishing, as the site Retraction Watch, which reports on papers that have had to be retracted, has shown. The climate in universities today demands vast quantities of publications in return for the promise of tenure. This pressure has already given rise to papermills, fraudulent organisations that produce and sell fabricated manuscripts designed to resemble genuine research. Academic publishers conduct rigorous checks, which increasingly involve the use of software and AI, to detect papermill activity. Researchers who turn to papermills to write their articles pay large sums of money for the service; with the advent of Chat GPT, which is currently free to access, it is likely that some researchers will be tempted to exploit it as a cheap and rapid way of generating papers.

Even if well-intentioned researchers use the program as a virtual assistant, avoiding the rigorous process of researching and writing papers is likely to result in poor-quality or even false research. When researchers use research assistants, they enable a productive interchange of ideas between different levels of the academic food chain. Using Chat GPT to create a first draft of a research paper will impoverish the ecosystem of which the published paper forms only a small part. Moreover, with Chat GPT’s cavalier attitude towards facts, the peer-review process could become a complete minefield. Verifying a paper’s findings will become increasingly complicated, particularly since reviewers’ time is already stretched. While it is currently not difficult for a person to spot an abstract written by AI, the software is not far from being able to fool a human reader. Indeed, when MBA students at the Wharton School of the University of Pennsylvania were asked to generate papers using the program, the papers passed a screening by Turnitin. This anti-plagiarism software is a vital tool for academic journals when screening submissions: if AI programs can increasingly manage to pass plagiarism checks, they will rapidly become attractive to researchers looking to publish in a hurry.

Academic journals already contend with dishonest tactics in researchers’ attempts to publish large quantities of work. With this new technology, we may soon have to re-evaluate the editorial process itself. As the task of verifying authorship becomes increasingly complex, new strategies and technologies will be needed to help editors and reviewers identify when they are reading a computer-generated text. Data science is already an important tool in screening for papermills, helping editors to spot instances of unusual co-authorship or duplicate submissions. Further such checks, focused on verifying studies and citations, will become essential. We must remain informed and vigilant about AI writing software as it continues to develop, so that the quality of academic publishing can be maintained.